At the intersection of cloud computing, artificial intelligence (AI), and Large Language Models (LLMs), organizations face a growing challenge: capturing the value of these transformative technologies without losing control of cost and efficiency. This article explores the synergies between Turbonomic, Cloud FinOps, AI cost optimization, and Large Language Models, and walks through a sample use case that highlights the importance of prompt tuning and prompt engineering in this complex landscape.

Understanding the Landscape

Cloud FinOps helps organizations manage the cost of cloud computing effectively, with clear financial accountability and transparency. At the same time, the rise of AI, and of Large Language Models in particular, introduces compute-intensive workloads, such as GPU-heavy training runs and high-volume inference, whose costs can grow quickly. AI cost optimization strategies are therefore crucial for balancing the innovation AI promises with the fiscal responsibility organizations demand.

The Role of Turbonomic in the Synergy

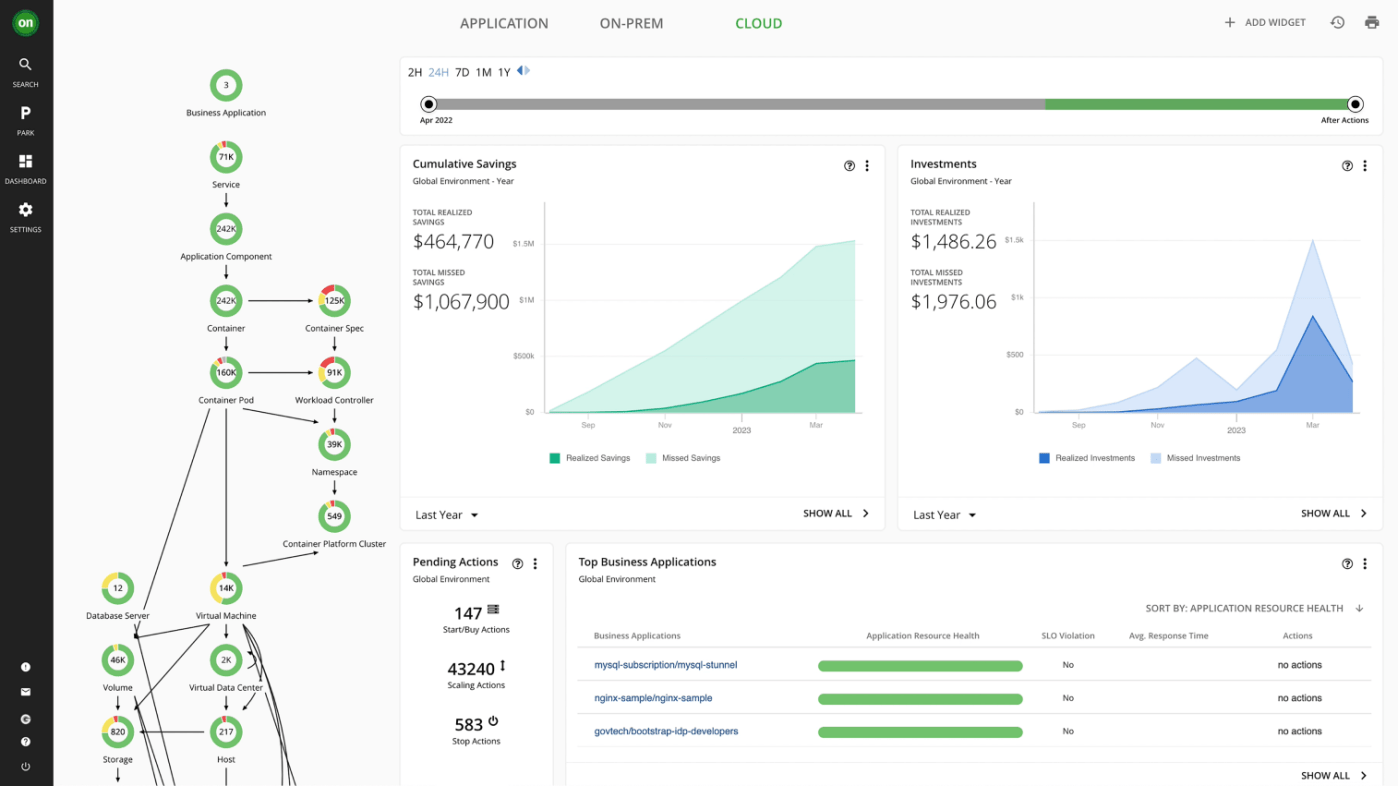

Enter Turbonomic, an intelligent workload management platform designed for cloud and hybrid environments. It automates resource management, leverages AI-powered decision-making, ensures continuous optimization, and provides unified visibility into resource utilization and costs.

Sample Use Case: Large Language Models, Prompt Tuning, and AI Cost Optimization

Consider an organization leveraging a Large Language Model for natural language understanding, text generation, and content creation. This organization faces the challenge of optimizing the costs associated with training and inference for the Large Language Model, while ensuring optimal performance.
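The split between training and inference spend is easier to reason about with a simple cost model. The sketch below is a minimal illustration, assuming training is billed per GPU-hour and inference per 1,000 tokens; the `LLMCostInputs` fields and all rates are hypothetical placeholders, not prices from any cloud provider or from Turbonomic.

```python
from dataclasses import dataclass


@dataclass
class LLMCostInputs:
    """Hypothetical inputs for a back-of-the-envelope LLM cost estimate."""
    training_gpu_hours: float    # GPU-hours consumed by one training or fine-tuning run
    gpu_hourly_rate: float       # assumed price per GPU-hour (USD)
    requests_per_month: int      # inference requests served per month
    tokens_per_request: int      # prompt + completion tokens per request
    price_per_1k_tokens: float   # assumed inference price per 1,000 tokens (USD)


def training_cost(c: LLMCostInputs) -> float:
    """One-off cost of a training run: GPU-hours multiplied by the hourly rate."""
    return c.training_gpu_hours * c.gpu_hourly_rate


def monthly_inference_cost(c: LLMCostInputs) -> float:
    """Recurring serving cost: total tokens per month priced per 1,000 tokens."""
    total_tokens = c.requests_per_month * c.tokens_per_request
    return total_tokens / 1000 * c.price_per_1k_tokens


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    inputs = LLMCostInputs(
        training_gpu_hours=512,
        gpu_hourly_rate=2.50,
        requests_per_month=1_000_000,
        tokens_per_request=800,
        price_per_1k_tokens=0.002,
    )
    print(f"training run: ${training_cost(inputs):,.2f}")
    print(f"inference:    ${monthly_inference_cost(inputs):,.2f} per month")
```

With these placeholder numbers, one training run costs $1,280 and a month of inference $1,600, which illustrates how recurring inference spend can overtake a one-off training cost.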

1. Automated Resource Management with Turbonomic:

Turbonomic continuously assesses the resource needs of the workloads that run the Large Language Model and adjusts their allocations in real time, for example by resizing the containers or virtual machines that host training and inference. Keeping compute resources matched to actual demand minimizes spending on idle capacity and maximizes efficiency.
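A rightsizing decision of this kind can be sketched as "size to observed peak demand plus headroom". The code below is a generic illustration, not Turbonomic's analysis engine; the 95th-percentile target, the 20% headroom, and the `recommend_allocation` helper are hypothetical choices.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sample list (pct in (0, 100])."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def recommend_allocation(
    usage_samples: list[float],
    current_allocation: float,
    pct: float = 95.0,
    headroom: float = 0.20,
    step: float = 1.0,
) -> tuple[float, str]:
    """Suggest a new allocation (e.g. GiB of memory) for an LLM-serving workload.

    Hypothetical policy: take the chosen usage percentile, add a headroom
    margin, and round up to the next allocation step.
    """
    target = percentile(usage_samples, pct) * (1 + headroom)
    proposed = math.ceil(target / step) * step
    if proposed < current_allocation:
        action = "resize down"
    elif proposed > current_allocation:
        action = "resize up"
    else:
        action = "no change"
    return proposed, action


if __name__ == "__main__":
    # Memory usage (GiB) sampled from a container that was given 64 GiB.
    samples = [20, 22, 21, 25, 24, 23, 22, 26, 21, 22]
    print(recommend_allocation(samples, current_allocation=64, step=8))
```

Here the 95th-percentile usage is 26 GiB; with 20% headroom the target rounds up to 32 GiB in 8 GiB steps, so the sketch suggests halving the 64 GiB allocation.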

2. AI-Powered Decision-Making for Large Language Models:

Turbonomic's AI-powered decision-making also extends to the specific requirements of Large Language Models. By analyzing utilization trends to anticipate future resource needs, it enables proactive adjustments that keep pace with the evolving demands of language processing tasks, rather than reacting only after performance degrades.
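Predicting future resource needs can be illustrated with the simplest possible forecaster: a least-squares linear trend over recent demand, converted into a capacity estimate. This is a deliberately basic stand-in, not the model Turbonomic uses; the `rps_per_gpu` throughput figure and the 10% headroom are hypothetical parameters you would measure for your own model and hardware.

```python
import math


def linear_forecast(history: list[float], steps_ahead: int) -> float:
    """Fit y = a + b*t by ordinary least squares and extrapolate steps_ahead periods."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_t = (n - 1) / 2
    mean_y = sum(history) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(history))
    var = sum((t - mean_t) ** 2 for t in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_t
    return intercept + slope * (n - 1 + steps_ahead)


def gpus_needed(forecast_rps: float, rps_per_gpu: float, headroom: float = 0.10) -> int:
    """Translate forecast demand (requests/second) into a whole number of GPUs."""
    return math.ceil(forecast_rps * (1 + headroom) / rps_per_gpu)


if __name__ == "__main__":
    # Hypothetical daily peak request rates for an LLM inference endpoint.
    peak_rps = [100, 110, 120, 130, 140]
    forecast = linear_forecast(peak_rps, steps_ahead=3)
    print(f"forecast peak: {forecast:.0f} req/s")
    print(f"GPUs to provision: {gpus_needed(forecast, rps_per_gpu=25)}")
```

On this trend the sketch forecasts a peak of 170 requests per second three days out and suggests provisioning 8 GPUs before that peak arrives.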

3. Continuous Optimization for Large Language Models:

Large Language Models often require iterative improvements and adaptations. Turbonomic's continuous optimization keeps the supporting infrastructure aligned with these changing requirements, avoiding both the unnecessary cost of over-provisioned, underutilized resources and the performance risk of under-provisioning.
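Continuous optimization is essentially a control loop that re-evaluates capacity each interval and corrects in both directions. The sketch below is a generic threshold-based scaler, assuming illustrative 30%/80% utilization bounds and a fixed per-replica capacity; `ScalingPolicy` and `simulate` are hypothetical names, and this is not Turbonomic's decision logic.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    low: float = 0.30        # below this utilization, capacity is over-provisioned
    high: float = 0.80       # above this utilization, capacity risks being under-provisioned
    min_replicas: int = 1
    max_replicas: int = 16


def next_replicas(current: int, utilization: float, policy: ScalingPolicy) -> int:
    """One step of the control loop: add or remove one replica, within bounds."""
    if utilization > policy.high:
        proposed = current + 1
    elif utilization < policy.low:
        proposed = current - 1
    else:
        proposed = current
    return max(policy.min_replicas, min(policy.max_replicas, proposed))


def simulate(demand: list[float], capacity_per_replica: float,
             initial: int, policy: ScalingPolicy) -> list[int]:
    """Replay a demand trace and record the replica count chosen after each interval."""
    replicas = initial
    history = []
    for d in demand:
        utilization = d / (replicas * capacity_per_replica)
        replicas = next_replicas(replicas, utilization, policy)
        history.append(replicas)
    return history


if __name__ == "__main__":
    # Hypothetical trace: quiet period, then a traffic spike (requests/second).
    trace = [100, 100, 100, 350, 350, 350]
    print(simulate(trace, capacity_per_replica=100, initial=4, policy=ScalingPolicy()))
```

On this trace the loop first removes an idle replica (4 → 3), then adds capacity one step at a time as the spike arrives, settling at 5 replicas: `[3, 3, 3, 4, 5, 5]`. Moving one replica per interval trades reaction speed for stability.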

4. Unified Visibility into Large Language Model Costs:

Turbonomic provides unified visibility, offering detailed reports on resource utilization and associated costs specific to the Large Language Model. This empowers organizations with insights for informed decision-making and strategic budget allocation.
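A per-workload cost breakdown of this kind is, at its core, cost allocation by tag, a common FinOps showback technique. The sketch below assumes a simplified billing record carrying a `workload` tag; `UsageRecord`, the tag values, and the hourly rates are hypothetical, and this is not Turbonomic's reporting API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """Hypothetical billing line item; real billing exports use their own schemas."""
    resource_id: str
    workload: str        # cost-allocation tag, e.g. "llm-training"
    hours: float
    hourly_rate: float   # assumed USD per hour


def cost_by_workload(records: list[UsageRecord]) -> dict[str, float]:
    """Sum cost (hours x rate) per workload tag."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.workload] += r.hours * r.hourly_rate
    return dict(totals)


def cost_report(records: list[UsageRecord]) -> list[tuple[str, float, float]]:
    """Return (workload, cost, share of total) rows, most expensive first."""
    totals = cost_by_workload(records)
    grand_total = sum(totals.values())
    rows = [(w, c, c / grand_total if grand_total else 0.0) for w, c in totals.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    records = [
        UsageRecord("gpu-node-1", "llm-training", 100, 3.00),
        UsageRecord("gpu-node-2", "llm-inference", 720, 1.50),
        UsageRecord("cpu-node-1", "llm-inference", 720, 0.10),
        UsageRecord("web-1", "web-frontend", 720, 0.05),
    ]
    for workload, cost, share in cost_report(records):
        print(f"{workload:<14} ${cost:>9,.2f}  {share:6.1%}")
```

In this example the inference workload accounts for $1,152 of the $1,488 total, which is the kind of insight that guides budget allocation.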

Prompt Tuning and Engineering: A Crucial Component

For Large Language Models, prompt tuning and prompt engineering are pivotal. Prompt engineering refines the wording and structure of the input prompts, while prompt tuning learns a small set of trainable prompt parameters with the model weights kept frozen; both let organizations steer the model toward desired outputs without retraining it. Turbonomic, in synergy with Cloud FinOps and AI cost optimization, provides the infrastructure support needed to run these prompt tuning experiments efficiently.
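Prompt experiments also have a direct cost dimension: with per-token pricing, input tokens are billed on every request, so a shorter prompt that still meets the quality bar can cut inference spend. The sketch below picks the cheapest prompt variant that clears a quality threshold. It assumes quality scores come from your own evaluation set, uses a rough four-characters-per-token heuristic instead of a real tokenizer, and uses hypothetical per-token prices; `PromptVariant` and `cheapest_acceptable` are illustrative names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptVariant:
    name: str
    template: str
    quality: float   # score on your own evaluation set (assumed 0..1)


def estimate_tokens(text: str) -> int:
    """Rough heuristic: about 4 characters per token for English text.
    Use the target model's actual tokenizer for real estimates."""
    return math.ceil(len(text) / 4)


def monthly_cost(variant: PromptVariant, requests_per_month: int, output_tokens: int,
                 price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Monthly cost of serving every request with this variant.
    Only the fixed template overhead is counted; user text is the same across variants."""
    input_tokens = estimate_tokens(variant.template)
    per_request = (input_tokens * price_in_per_1k + output_tokens * price_out_per_1k) / 1000
    return per_request * requests_per_month


def cheapest_acceptable(variants: list[PromptVariant], min_quality: float,
                        requests_per_month: int, output_tokens: int,
                        price_in_per_1k: float, price_out_per_1k: float) -> Optional[PromptVariant]:
    """Return the lowest-cost variant whose quality meets the threshold, if any."""
    acceptable = [v for v in variants if v.quality >= min_quality]
    if not acceptable:
        return None
    return min(acceptable, key=lambda v: monthly_cost(
        v, requests_per_month, output_tokens, price_in_per_1k, price_out_per_1k))


if __name__ == "__main__":
    variants = [
        PromptVariant("verbose", "You are a helpful assistant. " * 40 + "Summarize: {text}", 0.91),
        PromptVariant("concise", "Summarize the text below in three bullet points.\n{text}", 0.90),
        PromptVariant("minimal", "Summarize: {text}", 0.70),
    ]
    best = cheapest_acceptable(variants, min_quality=0.85, requests_per_month=1_000_000,
                               output_tokens=150, price_in_per_1k=0.001, price_out_per_1k=0.002)
    print(best.name if best else "no variant meets the quality bar")
```

The verbose variant scores marginally higher, but its repeated boilerplate multiplies input tokens on every request; the concise variant clears the 0.85 bar at a fraction of the input cost, so the sketch prints `concise`.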

Benefits of the Unified Approach

The unified approach of Turbonomic, Cloud FinOps, and AI cost optimization in the context of Large Language Models brings forth a host of benefits:

- Cost-Efficient Large Language Models: Turbonomic ensures that computational resources allocated to Large Language Models are optimized for cost-efficiency, preventing unnecessary expenditures.

- Proactive Adaptation to Workload Changes: The AI-powered decision-making capabilities of Turbonomic enable proactive adjustments to the infrastructure, so that the resources behind Large Language Models keep pace with changing workloads.

- Visibility and Control: Unified visibility into costs and resource utilization empowers organizations with the control needed to manage Large Language Models effectively within budgetary constraints.

Conclusion: Mastering Efficiency in the Age of LLMs

As organizations navigate the complexities of cloud computing, AI, and Large Language Models, the synergies between Turbonomic, Cloud FinOps, and AI cost optimization emerge as a strategic imperative. The sample use case shows how this unified approach coordinates resource management, cost visibility, and model experimentation, providing a roadmap for organizations seeking to balance innovation with financial responsibility in this dynamic landscape.
